MediatR versus Wolverine performance testing with K6

I have been using MediatR for some time now. In most of the architectures I’m currently setting up, I’m opting for a modular monolith with vertical slicing and CQ(R)S. MediatR is extremely helpful in keeping everything nice and decoupled.

However, there’s a (relatively) new kid on the block: https://wolverine.netlify.app.

I have often read and heard that MediatR can add quite a bit of overhead. I haven’t run into a situation where the impact was large enough to worry about, but it did make me curious: is Wolverine more performant than MediatR?

In this article, I want to share the results of a basic performance test comparing MediatR and Wolverine as mediator solutions for your codebase.

What is Wolverine

Wolverine is much more than just an alternative to MediatR. In their own words, it’s a Next Generation .NET Mediator and Message Bus, a batteries included modern and opinionated library! However, in this article, I will be sticking my fingers in my ears and shouting “LA LA LA I CAN’T HEAR YOU LA LA LA” while only focusing on the mediator part.

If you want to learn more about the other capabilities of Wolverine, I’d suggest checking out their website and subscribing to my newsletter. More about Wolverine is coming up in the future!

Setting up the tests

Here’s a very basic test setup:

- One web API: running in release mode

- Two Controller endpoints: one for MediatR and one for Wolverine, each with an integer as input

- Two query handlers: one for MediatR and one for Wolverine

For the actual test, I will be calling each endpoint repeatedly via HTTP GET to see how fast they are. That’s it! Each of the handlers does the same thing: it returns the integer it received as input. The only difference is the mediator library used to dispatch the query.

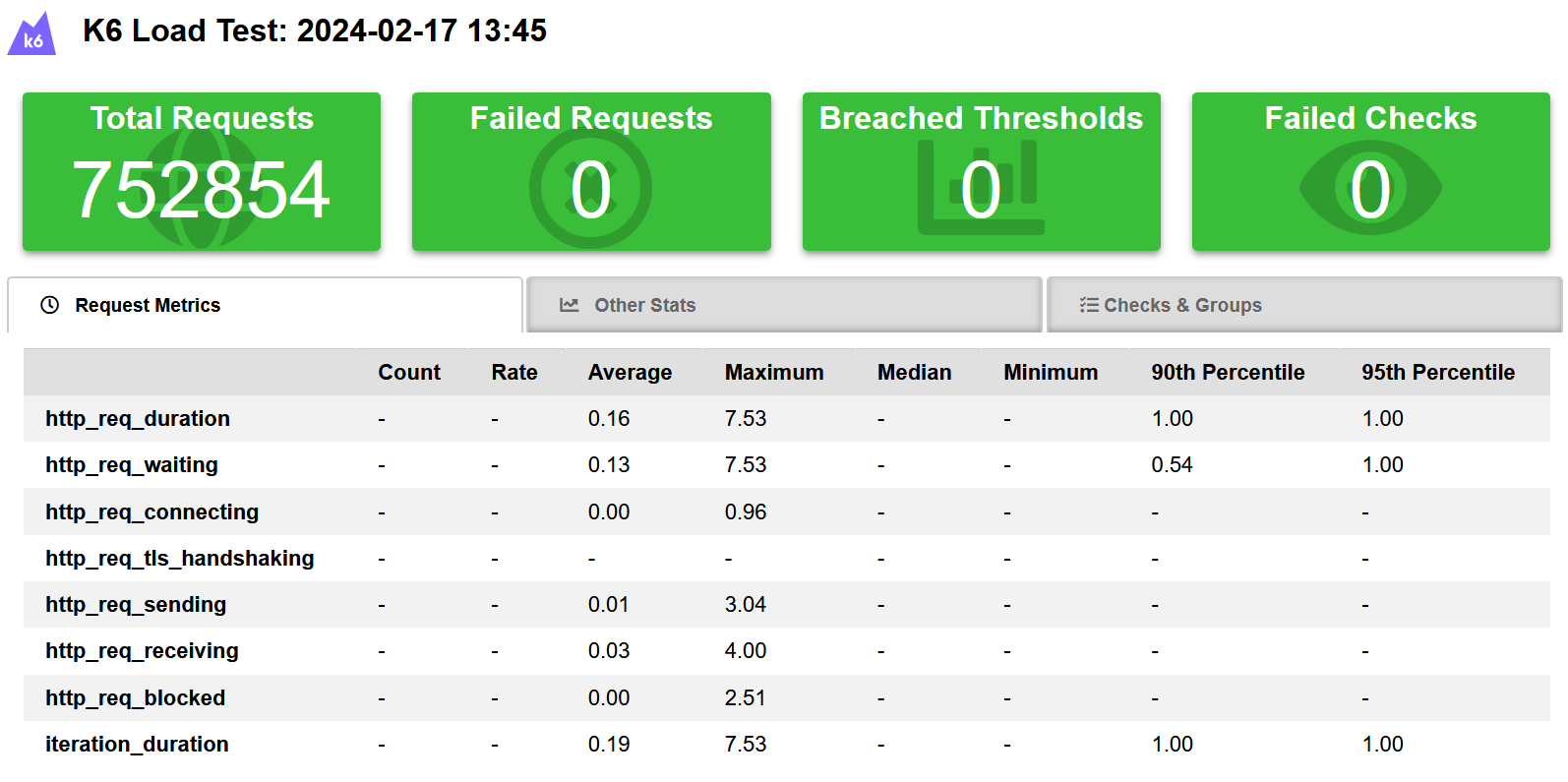

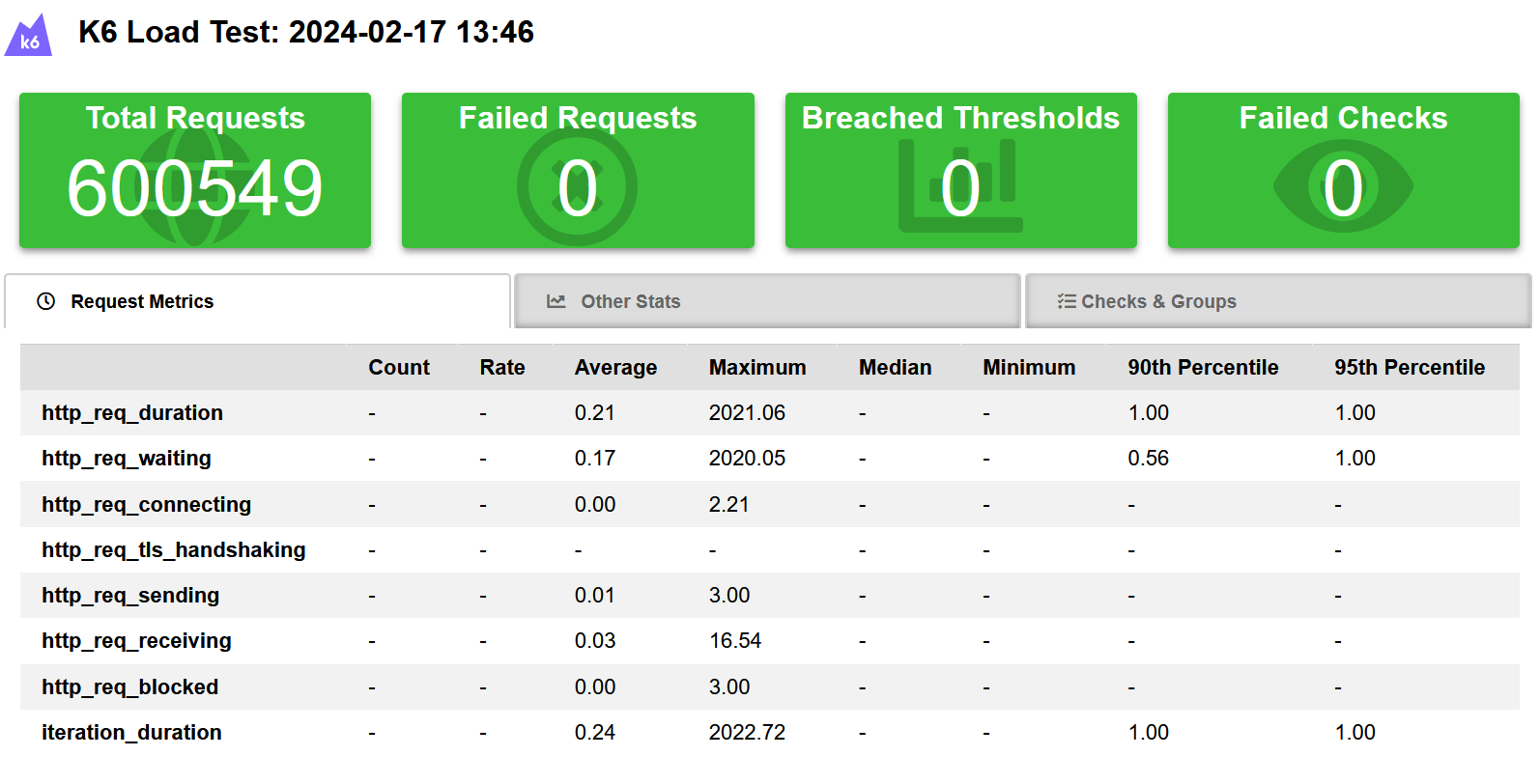

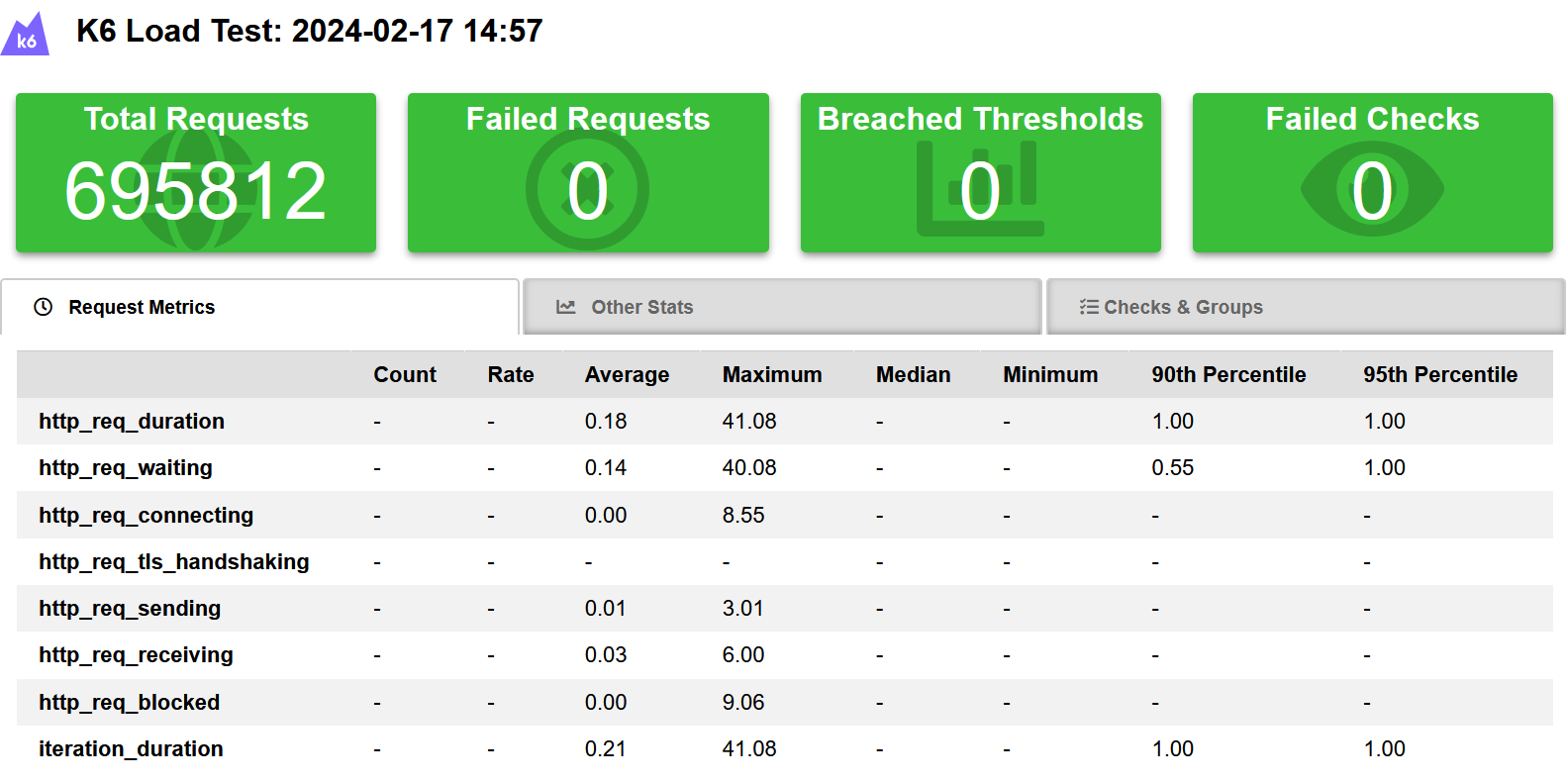
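For readers who prefer text over screenshots, here is a minimal sketch of what such a setup could look like. It is not the code from the test project: the type names (`EchoQuery`, `WolverineEchoQuery`, `MediatorTestController`) and the routes are hypothetical, and the sketch assumes MediatR 12 or later and a recent Wolverine release.

```csharp
using System.Threading;
using System.Threading.Tasks;
using MediatR;
using Microsoft.AspNetCore.Mvc;
using Wolverine;

// Hypothetical names throughout; only the library types and calls are real.

// MediatR: the query declares its response type and the handler implements an interface.
public record EchoQuery(int Value) : IRequest<int>;

public class EchoQueryHandler : IRequestHandler<EchoQuery, int>
{
    public Task<int> Handle(EchoQuery request, CancellationToken cancellationToken)
        => Task.FromResult(request.Value);
}

// Wolverine: a plain message type plus a handler found by naming convention
// (class name ends in "Handler", method is named "Handle").
public record WolverineEchoQuery(int Value);

public static class WolverineEchoQueryHandler
{
    public static int Handle(WolverineEchoQuery query) => query.Value;
}

[ApiController]
[Route("api")]
public class MediatorTestController : ControllerBase
{
    // Dispatches the query through MediatR and returns the echoed integer.
    [HttpGet("mediatr/{value:int}")]
    public Task<int> ViaMediatR(int value, [FromServices] ISender sender, CancellationToken ct)
        => sender.Send(new EchoQuery(value), ct);

    // Dispatches the query through Wolverine's in-process mediator.
    [HttpGet("wolverine/{value:int}")]
    public Task<int> ViaWolverine(int value, [FromServices] IMessageBus bus, CancellationToken ct)
        => bus.InvokeAsync<int>(new WolverineEchoQuery(value), ct);
}
```

For this to run, `Program.cs` would also need `builder.Services.AddControllers()`, `builder.Services.AddMediatR(cfg => cfg.RegisterServicesFromAssembly(typeof(Program).Assembly))`, `builder.Host.UseWolverine()` and `app.MapControllers()`.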

I’m using K6 (https://k6.io/) to run these load tests on my local machine, alongside my API. Each load test runs for 30 seconds, with 5 concurrent virtual users. Each test is also run separately to make sure they don’t influence each other. Finally, I ran each test multiple times to make sure there wasn’t a one-time issue that caused the numbers to be off. What you’re seeing here are the average results of ten test runs for each setup.

First test: naive approach

In the first test, I wanted to run both MediatR and Wolverine with the most basic setup. If you follow the Getting Started page for either of these libraries without reading any further, this is what you end up with.

MediatR

Wolverine

Looking at both of these tests, we can see that MediatR performs better. Something that stands out with the Wolverine test is that the max request duration is more than 2 seconds! This was actually to be expected because Wolverine uses code generation and the default setting will cause a slow cold start. Those 2 seconds are the very first call to the test handler.

Not a great start, but can we do better?

The second test: pre-generating code

When it comes to MediatR, there’s not much more we can do to improve performance. The strength of the library lies in its simplicity. But for Wolverine, we can do more!

As mentioned before, Wolverine uses runtime code generation to create the “adaptor” code it uses to call into your message handlers. Its middleware uses the same technique to “weave” calls to middleware directly into the runtime pipeline, without the copious adapter interfaces that are prevalent in most other .NET frameworks. You can read more about their code generation here: https://wolverine.netlify.app/guide/codegen.html.

In essence, it comes down to this: Wolverine has several configurable types of code generation:

- Dynamic mode: dynamically generates types on first use. This is the default setting, which results in a longer cold start. It doesn’t save any generated files to disk. Mostly recommended only during development. It’s what we used in the first test.

- Static mode: expects the types to already be pre-generated. You’ll have to run `dotnet run -- codegen write` to generate the types before running your application, or else it will fail. Recommended for use in production environments, where a deploy pipeline can generate everything needed upfront.

- Auto mode: will use pre-generated types already available. If nothing is available, it will automatically generate them and store them on your disk. It’s a best-of-both-worlds approach to the previous two.
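One way to combine these modes is to pick one per environment. The following is a sketch under assumptions, not the setup used for the tests in this article: it assumes a standard ASP.NET Core `Program.cs`, and the namespace that holds `TypeLoadMode` differs between Wolverine versions, so the first `using` line may need adjusting.

```csharp
using JasperFx.CodeGeneration; // assumption: TypeLoadMode lives here in your Wolverine version
using Wolverine;

var builder = WebApplication.CreateBuilder(args);

builder.Host.UseWolverine(opts =>
{
    // Production: require pre-generated types (run the codegen command in the deploy pipeline).
    // Everywhere else: generate on first use and skip the extra build step.
    opts.CodeGeneration.TypeLoadMode = builder.Environment.IsProduction()
        ? TypeLoadMode.Static
        : TypeLoadMode.Dynamic;
});

var app = builder.Build();
app.Run();
```

The trade-off is that a production deployment without the pre-generated types fails at startup instead of silently falling back to a slow first request.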

So let’s assume we want to run our code in a production setting. I ran the command above, pre-generated my types and configured Wolverine to use static mode:

```csharp
builder.Host.UseWolverine(opts =>
{
    opts.CodeGeneration.TypeLoadMode = TypeLoadMode.Static;
});
```

Let’s see what happens now when we run our Wolverine test.

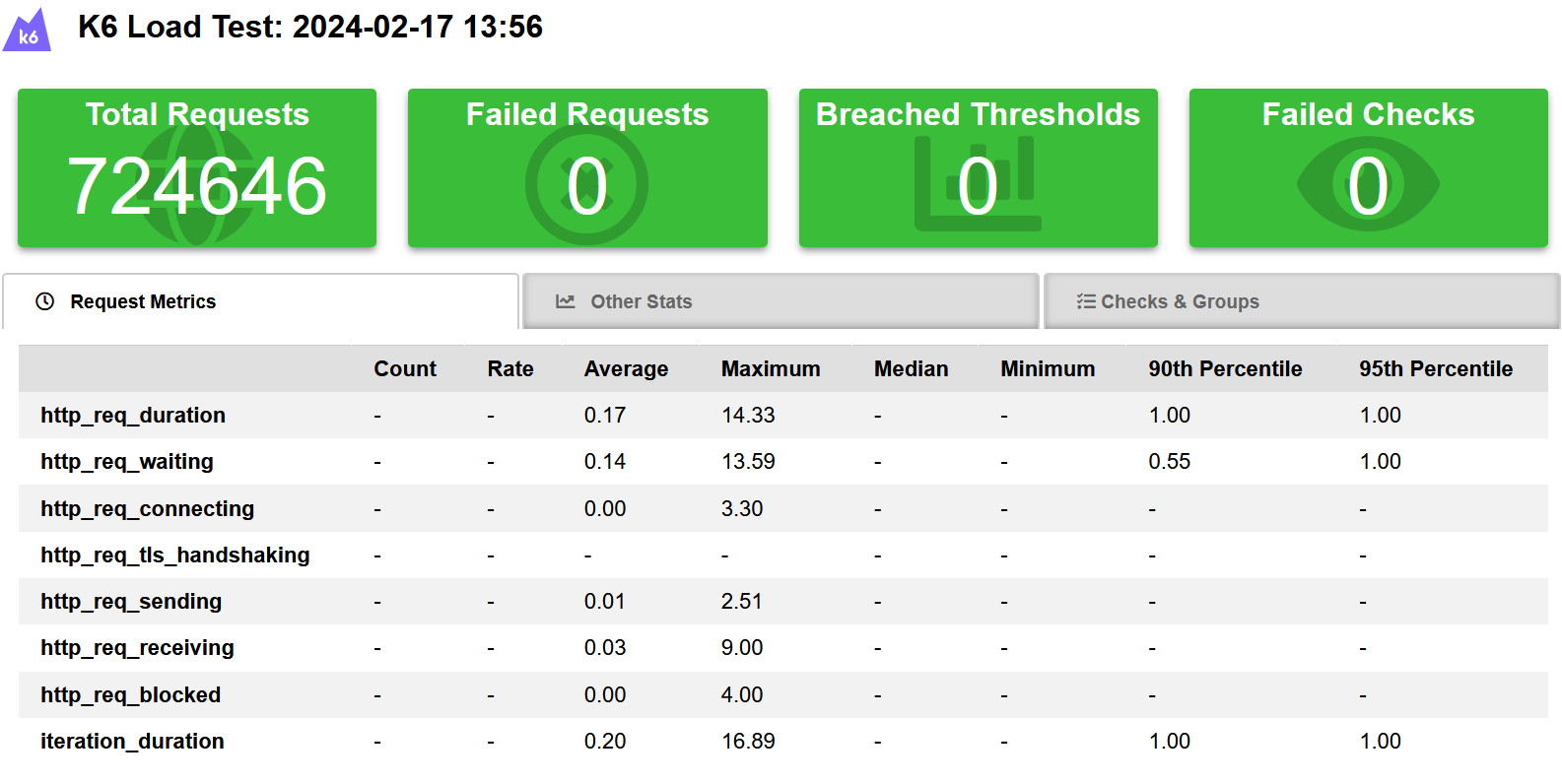

Wolverine with pre-generated types

Interesting! Wolverine hasn’t surpassed MediatR, but it’s a tie. Yes, there are fewer requests made to the Wolverine endpoint, but the average request duration (http_req_duration) only differs by 0.01 milliseconds. A trivial amount of time. So now we know Wolverine is at the very least equivalent in performance to MediatR as a mediator library.

But can we do even better?

The third test: trimming the fat

As I mentioned at the start of the article: Wolverine is more than just a mediator library. It has a bunch of really cool features for integrating with actual messaging systems like Azure Service Bus and RabbitMQ, it can keep track of outgoing and incoming messages using a built-in outbox and inbox pattern, etc… Again, a bunch of cool stuff we’re just totally ignoring in this article. We just want to see how it measures up to MediatR as a mediator library!

So the next step is obvious: reducing the overhead of all those bells and whistles we don’t need. The people at Wolverine were kind enough to help us with this as well by providing us with more information in their documentation: https://wolverine.netlify.app/tutorials/mediator.html.

It comes down to a single line of code again in the configuration of Wolverine:

```csharp
builder.Host.UseWolverine(opts =>
{
    opts.CodeGeneration.TypeLoadMode = TypeLoadMode.Static;
    opts.Durability.Mode = DurabilityMode.MediatorOnly;
});
```

This disables all the cool durability functionality included in Wolverine. Let’s see if anything has changed!

Wolverine with pre-generated types and mediator-only mode

Hmmm, strange. Things don’t seem to be getting any better. The average duration per request is again basically the same (only 0.02 ms variation). I guess I thought there would be some sort of impact, no matter how small. Is there anything else we can monitor?

Memory allocation

In this scenario, memory allocation also seems to be the same. I did a few tests using dotMemory, but nothing interesting came from it. I’m mentioning it here in case, dear reader, it crossed your mind.

I have reached the end of my rope, so let’s wrap this up!

Conclusion

There’s not much point in switching from MediatR to Wolverine if all you care about is performance. If you’re picking a library right now that just needs to be a mediator, you can’t go wrong with either. Just remember to set the code generation mode correctly when using Wolverine.

However, Wolverine has a bunch more things going for it that make it an extremely interesting library, provided you need any of the extra functionality. If you just need a mediator right now but might need to integrate with an actual message bus down the line, then Wolverine is worth checking out. With all the included tooling that allows for building more future-proof applications, it could be a library that saves you a lot of time and makes your life as a developer significantly easier.
